DATA/FFECT

“What’s on your mind?”

“What’s happening?”

Wherever you go on social media, you are prompted to disclose how you feel about an endless stream of content through likes, reactions, and text. No matter how you feel, these responses are fed back to you as algorithmic recommendations meant to keep you scrolling. To find our way out of these feedback loops, we need more than different questions.

Abstract/Overview

The Image-Affect tool gives you the means to answer differently, offering a simple interface to express and interpret the many layers that constitute how you feel online. We start with a simple question:

“How are you feeling?”

“Fine” “Okay” “Meh”…

In many ways, you need to be a poet to answer such an open prompt. At any given time, we feel so many things at once: fearful yet hopeful, depressed and angry, happy yet sad. These combinations are always changing. We offer a tool that embraces the many layers and complexities of our feelings.

#Affect

#Dis-information

#Algorithmic Recommendation

Whether we notice it or not, we are constantly manipulated by corporate social media. Social media entertain us, inform us, and overwhelm us with never-ending flows of recommendations for new things to like and new ways to hate. Bombarded by content from bots and friends, agitprop actors and fake accounts, we end up in echo chambers, resonating with feelings without the means to express them. Like/Dislike, Happy/Angry, 🤣/😿: these are the crude tools available to us as we yo-yo between FOMO and digital exhaustion.

To keep us scrolling no matter how we feel, social media platforms need to know what makes us react in certain ways. What kinds of content will make us angry, or happy, or feel connected, or disconnected? What are our triggers?

Affective computing is the umbrella term for the many techniques used by social media to refine these emotional triggers. It uses emotion recognition and sentiment analysis to continuously collect data about us and about how we react: our posts, emoticons, hashtags, likes, and so on. With AI, these systems not only analyze what we feel, they anticipate how we will feel and push us toward whatever feelings will be best for the platform. To fight mis- and disinformation, we must recognize that these systems do not care if their content is harmful as long as the feelings they generate keep us online. In our post-truth present, it is important to remember that sometimes the truth hurts.

Countering Sentiment Analysis

If the affective computing industry gives any indication, social media posits that there exists a set of dominant feelings that can be extracted, catalogued and triggered by specific kinds of content. Image-Affect identifies and responds to a major flaw in this perspective. No one emotional state can ever fully capture the complexities and contradictions of feelings. Sometimes it feels good to feel bad, sometimes it is bad to feel good. Oftentimes we feel more than one emotion at a time. The affects that flow through us are multiple and changing. From one day to the next, the same content might resonate differently, so why should one emotional state define what we are allowed to feel?
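To make this flaw concrete, here is a minimal Python sketch. It assumes a hypothetical set of per-emotion scores for a single moment of scrolling. A single-label approach keeps only the top score, while keeping every affect above a threshold preserves the mixture. The function names, the threshold, and the scores are illustrative assumptions. They are not taken from Image-Affect or from any particular sentiment-analysis product.

```python
# Hypothetical illustration: the affect names and scores below are made up,
# not data from Image-Affect or any real sentiment-analysis system.


def dominant_label(scores: dict[str, float]) -> str:
    """What a single-label classifier reports: only the top-scoring emotion."""
    return max(scores, key=scores.get)


def felt_affects(scores: dict[str, float], threshold: float = 0.2) -> list[str]:
    """Every affect at or above a (hypothetical) threshold, strongest first."""
    kept = [name for name, value in scores.items() if value >= threshold]
    return sorted(kept, key=lambda name: scores[name], reverse=True)


# One moment of scrolling, felt as several things at once.
moment = {"hopeful": 0.6, "fearful": 0.55, "tired": 0.4, "angry": 0.05}

print(dominant_label(moment))
print(felt_affects(moment))
```

The single label reports only "hopeful" and discards the fear and tiredness that were nearly as strong. This is the kind of flattening the tool is meant to resist.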

As we are constantly bombarded with content collated from an algorithmic profile of what we are expected to feel, we never get the time to fully experience our feelings. We are rushed to add a like and swipe to the next thing. Affects that are present but never fully experienced accumulate, and this accumulation often reveals itself as a profound sense of disconnection and tiredness. It is simply too much to cope with.

Our goal, then, is to present an anti-sentiment-analysis tool, one that pries open the patterns that restrict and redirect our feelings online while giving users the means to map their affects without any form of manipulation or extraction. Interpreting these data is then left to users, who can visualize and share them on their own terms.

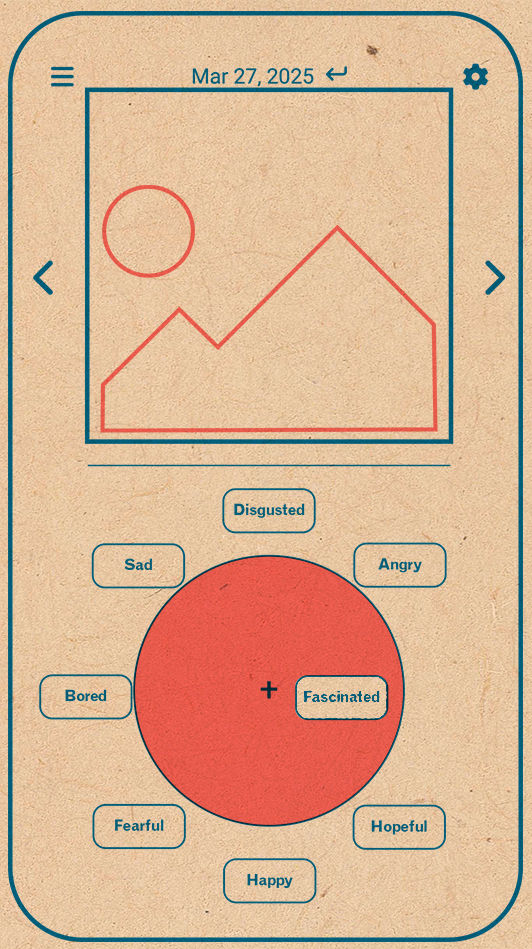

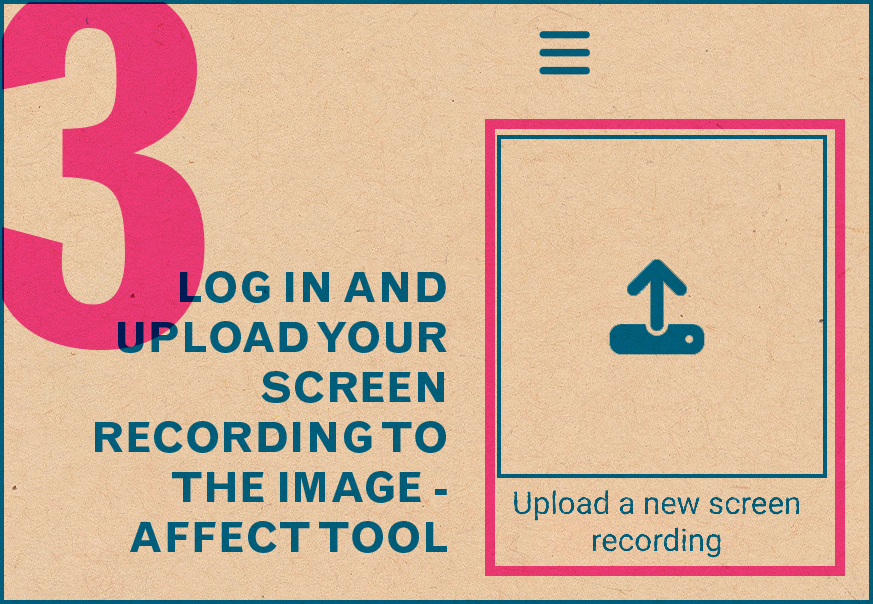

The Image-Affect Tool

The Image-Affect tool allows you to upload recordings of your scrolling sessions. It extracts images from each recording and provides you with a wheel of affects and a sliding scale of intensity. For each image, you can drag and drop affects onto the wheel: the closer to the centre, the more intense the affect. You can add as many affects as you want or leave the wheel empty. You can come back and change your mind. You can lie about how you feel if you want. Only you can understand what you were thinking and feeling as you tagged. Only you can reassemble the data you create into a meaningful interpretation of your feelings. We invite you to be playful and to trust your intuition; you might realize that what you actually feel is very, very different from what you think you should feel.
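One way to picture the data this interaction produces is the minimal Python sketch below. It assumes that each tag is stored as an affect name plus its distance from the centre of the wheel, and that intensity rises linearly as that distance shrinks. The class names, the linear mapping, and the normalized radius are hypothetical. They are not taken from the Image-Affect codebase.

```python
from dataclasses import dataclass, field

WHEEL_RADIUS = 1.0  # hypothetical: distances normalized so the rim is at 1.0


@dataclass
class AffectTag:
    affect: str      # e.g. "uneasy"; the tool's actual affect names may differ
    distance: float  # how far from the centre of the wheel the tag was dropped

    @property
    def intensity(self) -> float:
        """Closer to the centre means more intense: 1.0 at the centre, 0.0 at the rim."""
        clamped = min(max(self.distance, 0.0), WHEEL_RADIUS)
        return 1.0 - clamped / WHEEL_RADIUS


@dataclass
class TaggedImage:
    frame_id: str
    tags: list[AffectTag] = field(default_factory=list)  # may stay empty

    def place(self, affect: str, distance: float) -> None:
        """Add an affect, or move it if it is already on the wheel (changing your mind)."""
        self.tags = [t for t in self.tags if t.affect != affect]
        self.tags.append(AffectTag(affect, distance))


frame = TaggedImage("frame-0042")
frame.place("amused", 0.7)
frame.place("uneasy", 0.2)
frame.place("amused", 0.3)  # moved closer to the centre, so now more intense
for tag in frame.tags:
    print(tag.affect, round(tag.intensity, 2))
```

Storing the raw distance rather than a precomputed score keeps the record close to the gesture itself. Any mapping to intensity remains an interpretive choice that can be revisited.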

Affective Landscapes

Typically, the power to visualize and interpret the data generated by our affective experience online is withheld from social media users. The archive of affects recorded through sentiment analysis and affective computing is a monopoly of the platform, and it defines how your data are valued and fed back to you as algorithmic recommendations. Image-Affect remediates this process, offering users a toolset to aggregate and analyze the data they catalogue by uploading and tagging content.
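As a rough illustration of what such aggregation could involve, the Python sketch below summarizes a tagging session per affect. It assumes, hypothetically, that each tagged image is stored as a mapping from affect names to intensities between 0 and 1, with an empty mapping for an image left untagged. The sample data and the choice of count, total, and mean are illustrative, not the tool's actual analysis.

```python
from collections import defaultdict

# Hypothetical tagging session: each image maps affect names to intensities
# in [0, 1]. An empty dict is an image the user chose to leave untagged.
session = [
    {"curious": 0.8, "uneasy": 0.3},
    {},
    {"uneasy": 0.9, "tired": 0.5},
    {"curious": 0.2},
]


def aggregate(images: list[dict[str, float]]) -> dict[str, dict[str, float]]:
    """Summarize each affect across a session: how often it was tagged,
    its summed intensity, and its mean intensity when present."""
    counts: dict[str, int] = defaultdict(int)
    totals: dict[str, float] = defaultdict(float)
    for tags in images:
        for affect, intensity in tags.items():
            counts[affect] += 1
            totals[affect] += intensity
    return {
        affect: {
            "count": counts[affect],
            "total": totals[affect],
            "mean": totals[affect] / counts[affect],
        }
        for affect in counts
    }


for affect, stats in sorted(aggregate(session).items()):
    print(affect, stats)
```

Keeping counts separate from intensities lets a visualization distinguish an affect that surfaced often but faintly from one that surfaced rarely but strongly.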

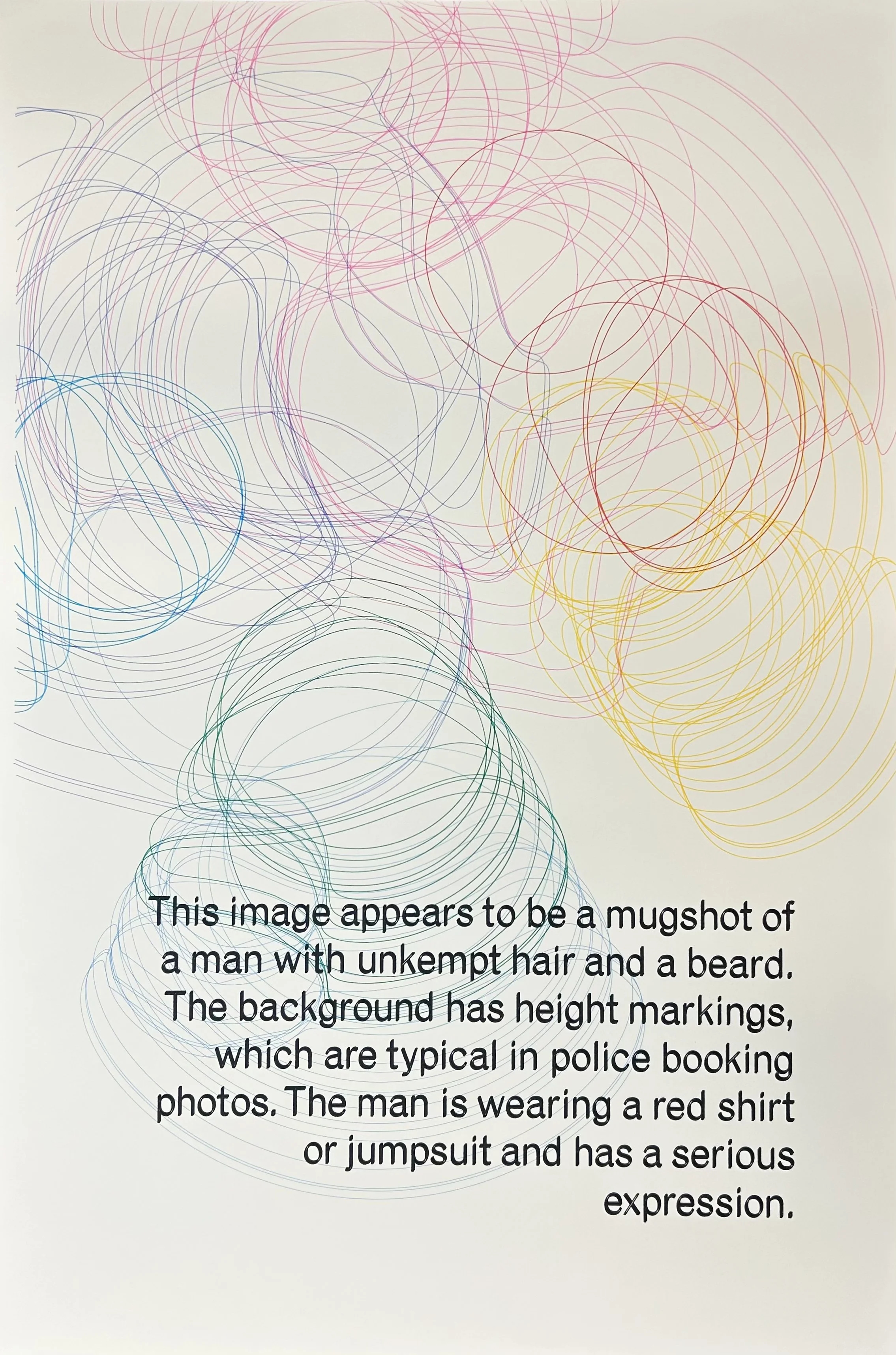

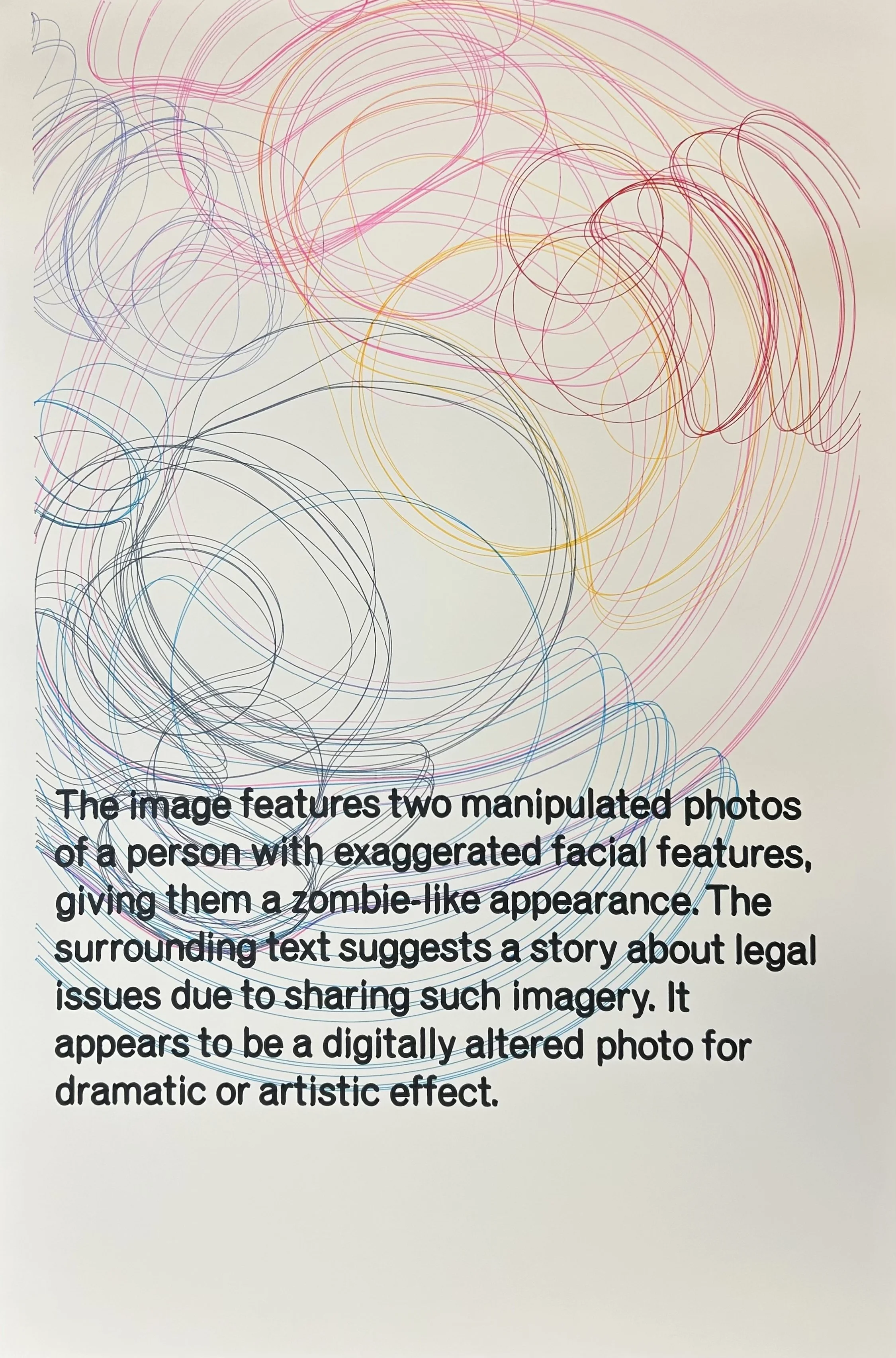

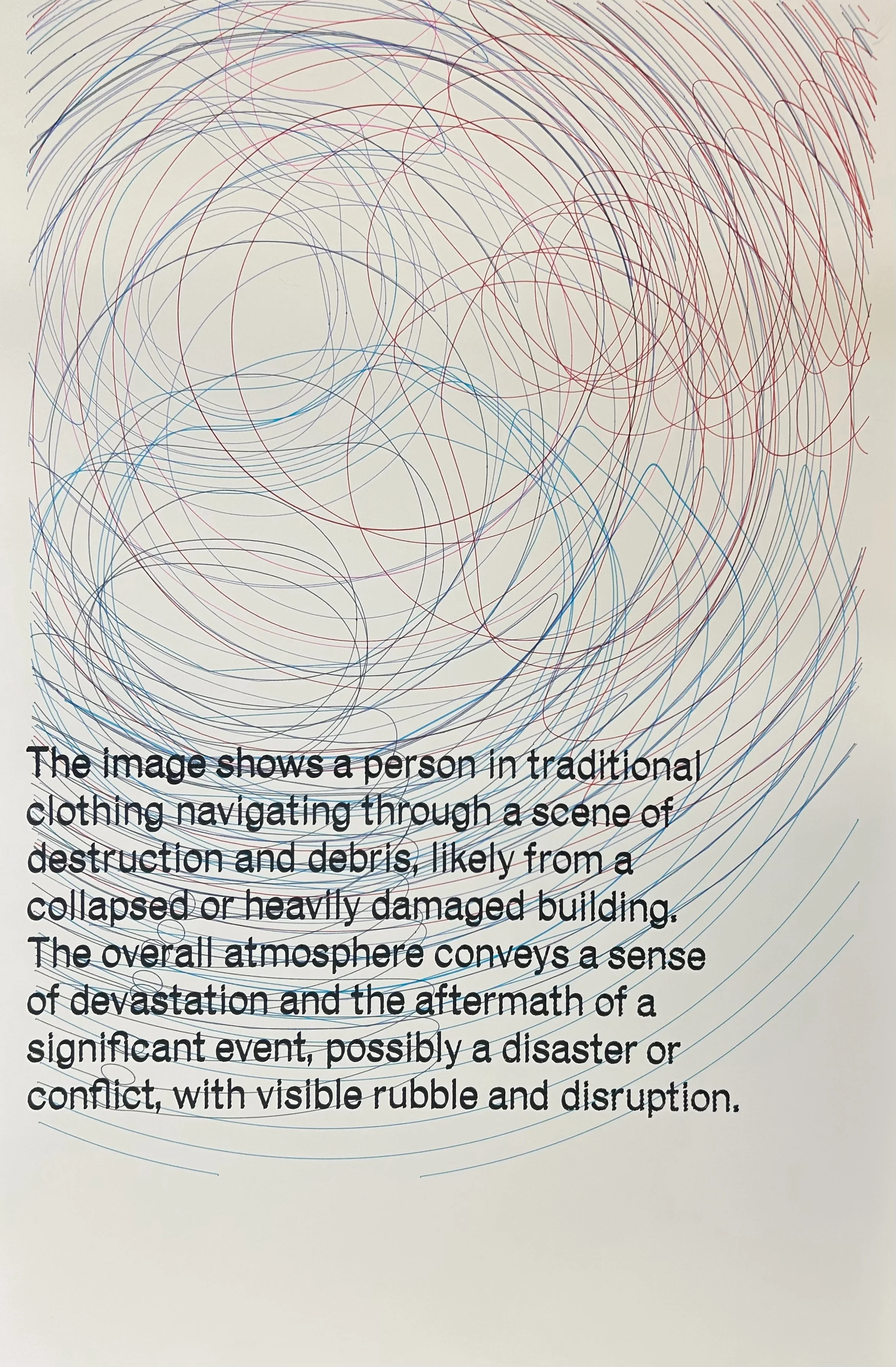

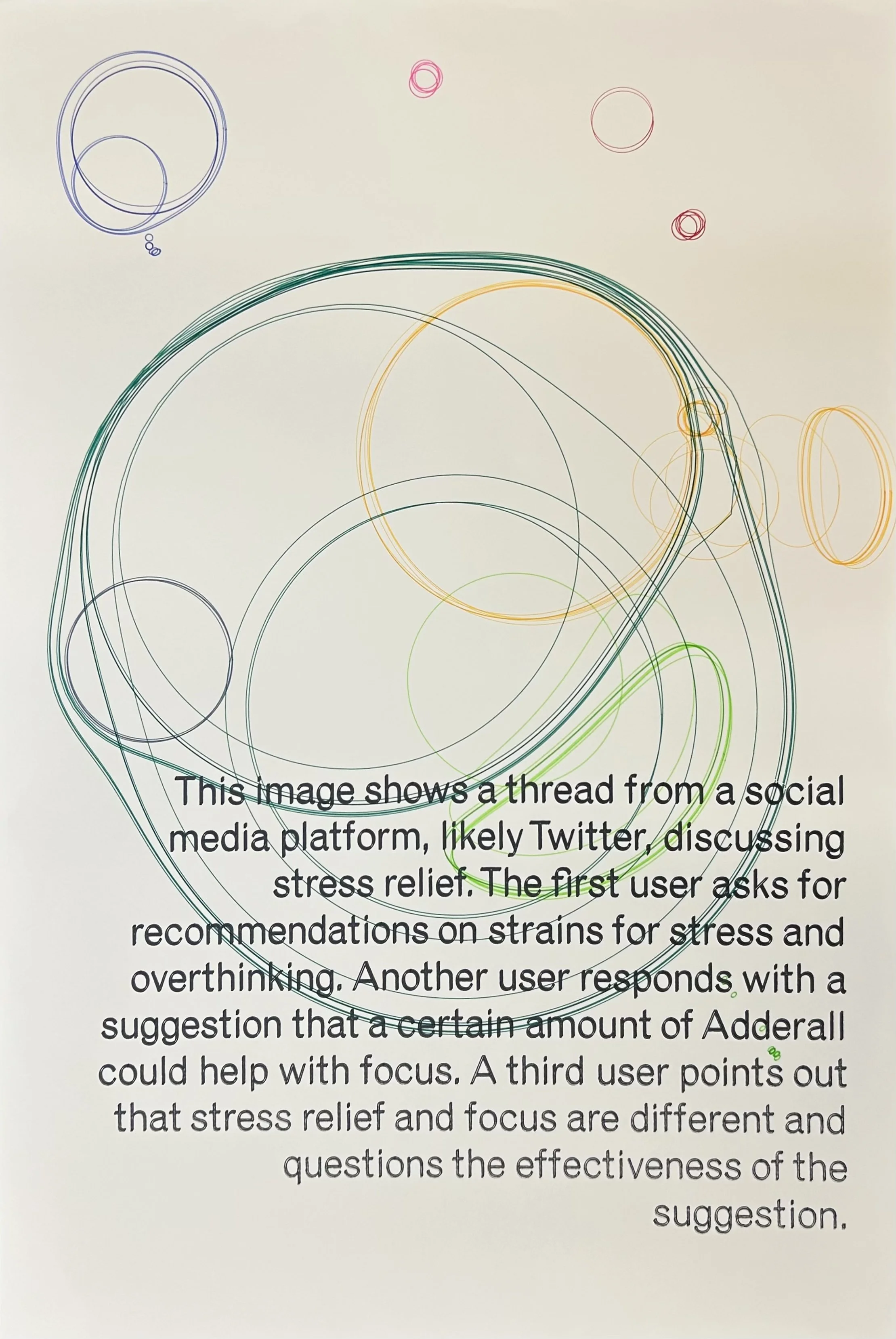

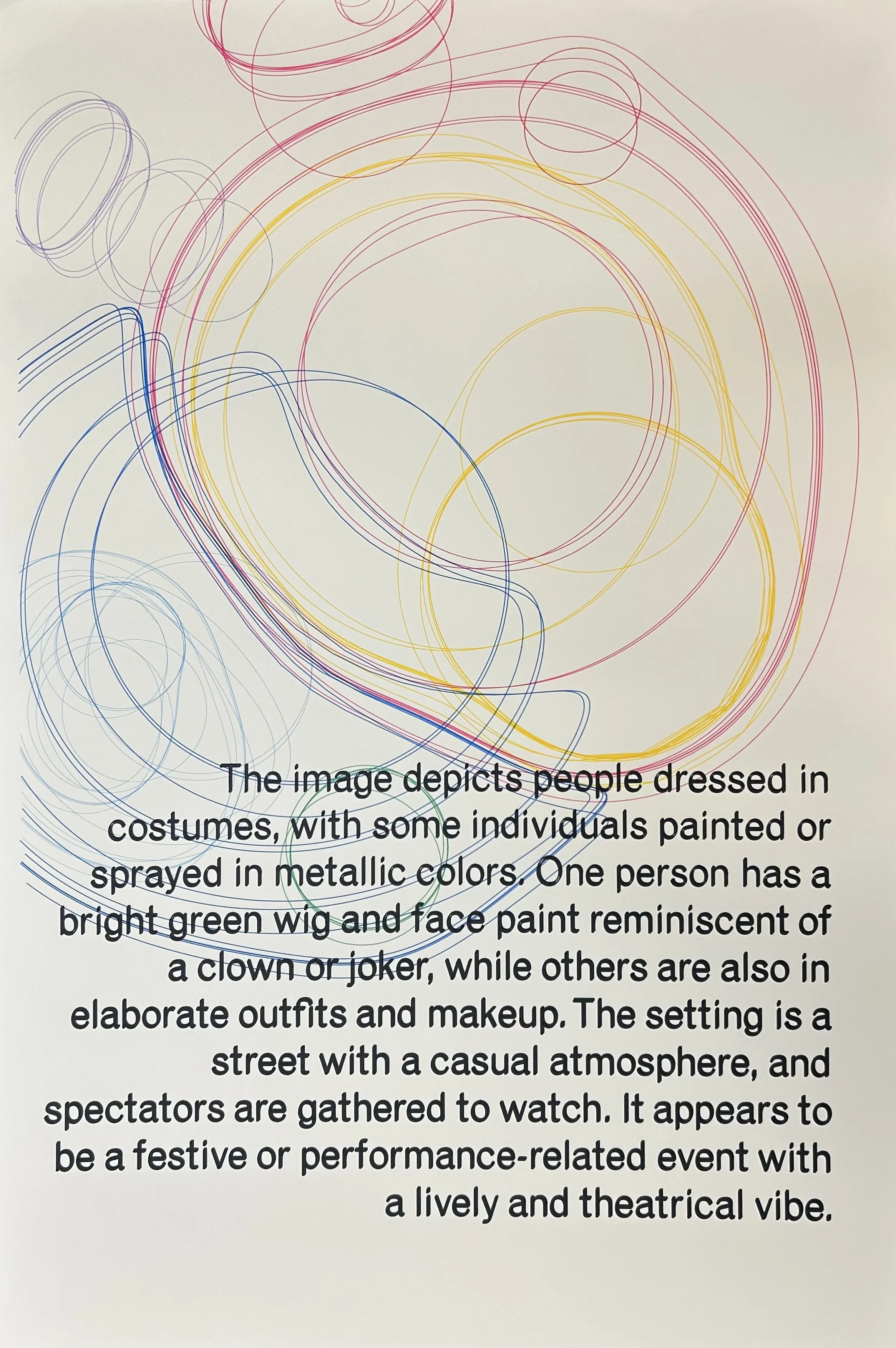

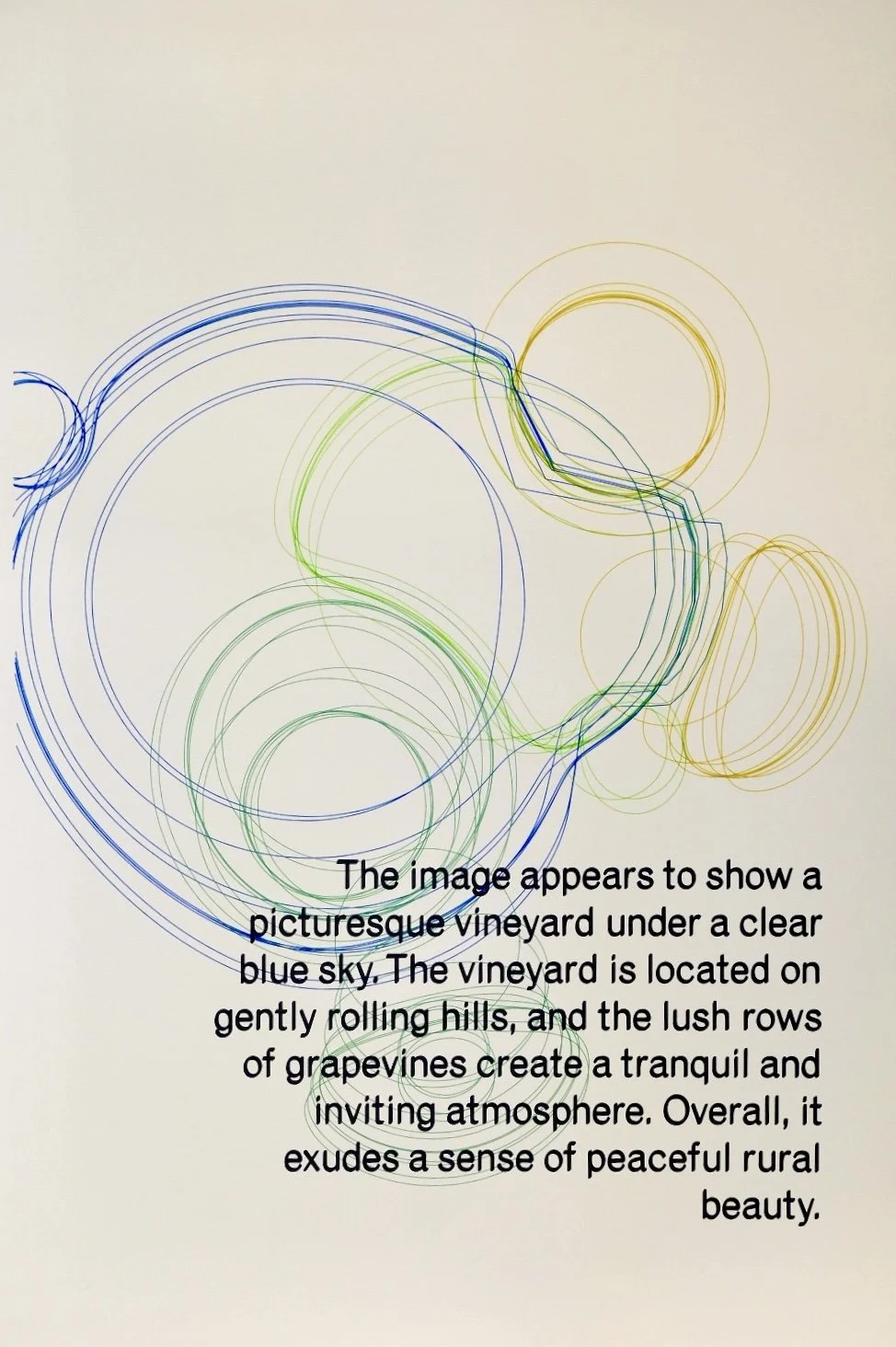

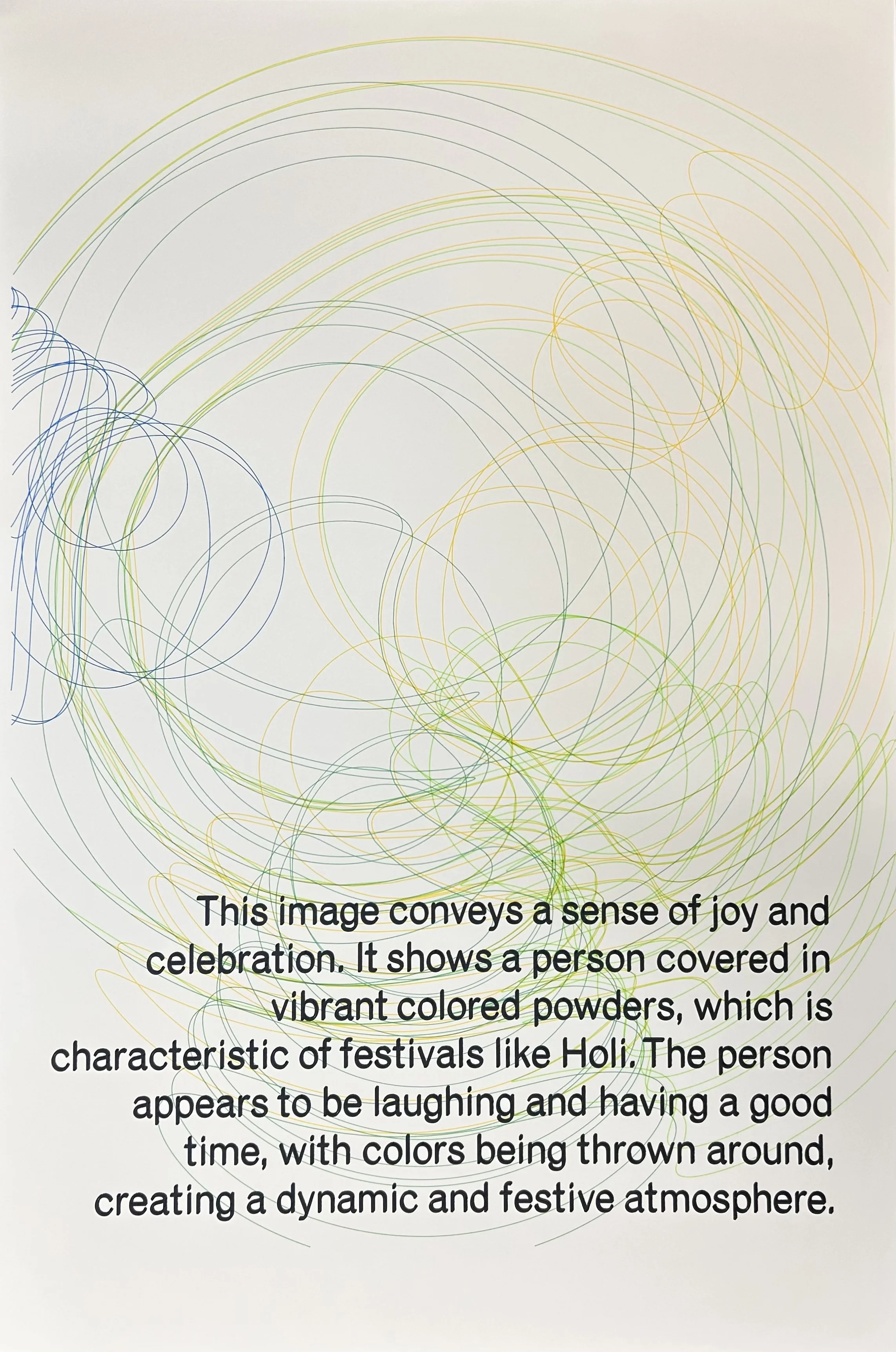

Through Affective Landscapes, the act of tagging affects is visualized as a colorful, abstract field, tracing both the qualities of the tagged affects and their respective intensities. These images prompt you to consider the complexities of your feelings beyond the categories of sentiment analysis. You may see traces of past enjoyment or a swirling sea of dissatisfaction, but your interpretation of these data is yours alone.

Process and Documentation

Data collected through an initial trial of the Image-Affect tool were used to generate Affective Landscapes visualizations using a custom pen plotter control library. This process yielded a series of drawings, which were displayed at Vancouver’s Or Gallery in May and June of 2025 for the Data Fluencies: Tributaries exhibition. These drawings were captioned using an LLM-based image captioner, which was fine-tuned to provide the algorithm’s “gut feeling” about an image. Each caption is contrasted with the user-contributed data, rendered as interacting flows of affect.

Credits

Thank you to the Image-Affect software development team: Matt Canute, Mel Racho, Shane Eastwood, Craig Fahner, Nora Liu, Chancellor Richey, Eli Zibin

DATA/FFECT is Anthony Burton, Matt Canute, Craig Fahner, Ganaele Langlois, Mel Racho and Rory Sharp.

This project is part of the Data Fluencies Project (2022-2025) hosted at the Digital Democracies Institute at Simon Fraser University.